This means that cumulative results are not displayed for the month, quarter or year either. Here are a couple of practical examples:

Imagine your production department releases updates, including flash offers, on Wednesdays and Fridays at 5pm.

On Wednesday, if you reach your sample quota at 6pm, your updates will only partly be taken into consideration. On Friday, if you reach your quota at 4pm, your updates will not be considered at all, even though the Internet behaviour of visitors to your site at 5pm is considerably different from that of visitors at 4pm.
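To make the Friday scenario concrete, here is a minimal Python sketch. It assumes a hypothetical tool that keeps hits in arrival order until a fixed quota is reached and drops everything after that; the function name `keep_until_quota`, the quota of 450 hits and the hourly traffic figures are invented for illustration and do not describe any particular product.

```python
from collections import Counter

def keep_until_quota(hits, quota):
    """Hypothetical policy: keep hits in arrival order until the quota is reached.

    `hits` is a list of (hour, visitor_id) tuples sorted by arrival time.
    Everything after the first `quota` hits is discarded.
    """
    return hits[:quota]

# Invented Friday traffic: 100 hits per hour from 12:00 to 19:59.
hits = [(hour, f"v{hour}-{i}") for hour in range(12, 20) for i in range(100)]

kept = keep_until_quota(hits, quota=450)
hits_per_hour = Counter(hour for hour, _ in kept)

# The quota runs out partway through the 16:00 hour, so the traffic that
# follows the 17:00 release never reaches the report.
print(dict(hits_per_hour))  # → {12: 100, 13: 100, 14: 100, 15: 100, 16: 50}
```

Because the cut-off depends on when the quota is exhausted rather than on any random draw, the hours that are lost are always the latest ones, which is exactly where the release effect would show up.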

The same applies to the total number of cumulative hits for a given month. For example, if in November you retain only 10 million hits out of 20 million, and in December only 10 million hits out of a larger total, the 20 million hits retained are clearly not representative of the combined total.

Now imagine your history displays 14 million hits and the corresponding visits. This can have a notable effect when there are seasonal variations. On the other hand, if February is a weak month (half of a normal month), there is no point in sampling, since the real value is below the quota.

Because of sampled data, you now have an incomplete and therefore inaccurate view of your campaign performance. Your analytics solution should instead be able to collect and measure every single interaction a user has with your digital platforms, at any moment, all the time.

Your data must be complete and rich enough to answer very specific questions from every department of your company. Using small, sampled data sets can significantly undermine decision-making within your organisation. Although sampled data can highlight general trends, the smaller your sample, the less representative it is of the truth.

This is particularly the case when carrying out granular analysis on small, sampled data sets. In order for your data-driven decisions to be truly accurate, they must be based on data that is complete, comprehensive and sufficiently rich.

Your analytics tool must therefore collect all necessary data and also provide the right processing and enrichment to let you turn this data into action.

Data sampling is also used to identify patterns and extrapolate trends in an overall population. With data sampling, researchers, data scientists, predictive modelers, and other data analysts can use a smaller, more manageable amount of data to build and run analytical models.

This allows them to produce accurate findings about a statistical population more quickly. For example, if a researcher wants to determine the most popular fruit in a country with a population of millions of people, they would select a representative sample of N people rather than survey everyone. There are many data sampling methods, each with characteristics suited to different types of datasets, disciplines, and research objectives.

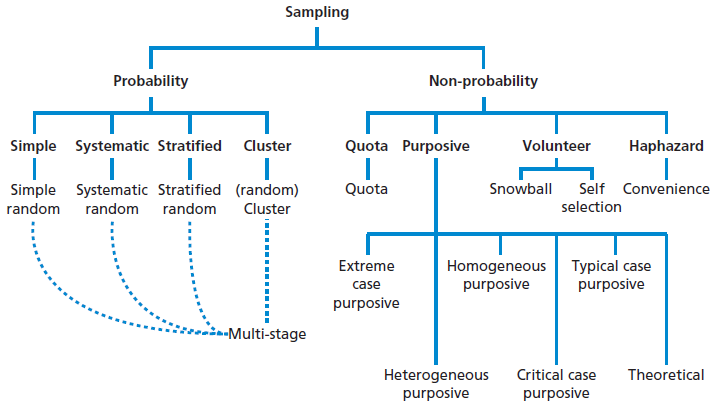

Data sampling methods are divided into two categories: probability and non-probability sampling. Probability sampling, also known as random sampling, uses randomization instead of deliberate choice for sample selection.

Conversely, with non-probability sampling techniques, sample selections are made based on research objectives. Probability data sampling uses random numbers that correspond to points in a dataset.

This ensures that there is no correlation between sample selections. With probability sampling, samples are chosen from a larger population to suit various statistical methods. Probability data sampling is the best approach when the objective is to create a sample that accurately represents the population.

Types of probability data sampling include the following.

Simple random sampling gives every element in the dataset an equal probability of being selected.

It is often done by anonymizing the population. For instance, the population can be anonymized by assigning elements in the population a number and then picking numbers at random. Software is usually used for simple random sampling.
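Numbering the population and picking numbers at random, as described above, takes one call to Python's standard `random` module. This is a minimal sketch; the population of 1,000 numbered elements, the seed, and the helper name `simple_random_sample` are illustrative assumptions.

```python
import random

def simple_random_sample(population, n, seed=None):
    """Draw n distinct elements, each element having the same chance of selection."""
    rng = random.Random(seed)  # seeded generator so the draw is reproducible
    return rng.sample(population, n)  # sampling without replacement

# "Anonymize" the population by numbering its elements 0..999,
# then pick 50 of those numbers at random.
population = list(range(1000))
sample = simple_random_sample(population, 50, seed=42)
print(sorted(sample)[:10])
```

Seeding is optional; it is used here only so that the same draw can be reproduced later.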

The benefits of simple random sampling are that it can be done easily and inexpensively and that it removes bias from the sampling process. A limitation of simple random sampling is unrepresentative groupings, because researchers do not have control over the sample selection.

Stratified sampling is used when researchers require groups based on a common factor.

The strata, or subgroups, are either homogeneous or heterogeneous. With stratified sampling, every stratum of the population is represented, with samples pulled from each of the different strata.

However, the process of classifying the population can introduce bias.

Cluster sampling divides datasets into subsets, or clusters, according to a defined factor. Then, samples are randomly selected. With cluster sampling, whole groups are selected rather than individual elements. A more complicated variation of cluster sampling is multistage sampling.

This method separates clusters based on different criteria and then samples each resulting subset; this can be repeated any number of times.

Systematic sampling, or systematic clustering, creates samples by setting an interval at which to extract data from the dataset according to a predetermined rule.

With systematic sampling or clustering, randomness applies only to the selection of the first item. After that, the rule dictates the selections. For example, a sample one-tenth the size of a dataset can be created by selecting every 10th row, starting from a row chosen at random among the first ten.
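The systematic rule above (one random start, then a fixed interval) can be sketched in Python as follows. The 10,000-row dataset, the seed, and the function name `systematic_sample` are hypothetical.

```python
import random

def systematic_sample(rows, interval, seed=None):
    """Pick a random start in [0, interval), then take every `interval`-th row."""
    rng = random.Random(seed)
    start = rng.randrange(interval)  # the only random choice in the procedure
    return rows[start::interval]     # the rule dictates every later selection

rows = list(range(10_000))  # hypothetical 10,000-row dataset
sample = systematic_sample(rows, interval=10, seed=7)
print(len(sample), sample[:3])
```

Since 10,000 is an exact multiple of the interval, every possible start yields 1,000 rows. If the data has a periodic pattern that lines up with the interval, the sample can be badly skewed, which is worth checking before relying on this method.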

A limitation of non-probability data sampling is that it can be subject to bias and may not accurately represent a larger population.

Non-probability data sampling is used when expediency or simplicity is required. Types of non-probability sampling include the following.

Convenience sampling collects data from an easily accessible and available group. For example, convenience sampling would be done by asking people outside a store to take a survey.

The collected elements are based on accessibility and availability. Convenience sampling is often used as a preliminary step.

What, then, is a sampling technique? Essentially, it is a method used to obtain a subset of data from a larger set for analysis. Being able to make sense of data, navigate it, and use it to make an impact is crucial for anyone whose career involves working with data.


In statistics, sampling is the process of gathering information about a population from a subset, such as a selected individual or a small group, and analyzing that information to study the whole population. The sample forms the foundation of the data, which in turn determines the accuracy of the study or research.

Sampling, however, is not as simple as it seems. To obtain an accurate result, the sample size must be appropriate, and the sampling method must be chosen to suit that sample size.

An analyst needs to follow certain steps in order to reach conclusions from a broader perspective. In the probability sampling approach, a researcher selects a few criteria and randomly selects individuals from a population.

Under this selection method, each member has an equal chance of being included in the sample, and researchers work with randomly chosen participants.

Non-probability sampling, by contrast, does not follow a set or predetermined selection procedure. As a result, it is difficult for all parts of a population to have an equal chance of being included in a sample.

Probability sampling is typically favored for large-scale investigations, particularly when a sampling frame is available that makes it possible to select and reach every unit in the population. With probability sampling, we can measure the standard error of estimates, construct confidence intervals, and formally test hypotheses.
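As an illustration of the confidence intervals mentioned above, here is a minimal sketch of a 95% interval for a population mean estimated from a simple random sample. It assumes the normal approximation (z ≈ 1.96) and ignores the finite population correction; the synthetic Gaussian population and the helper name `mean_confidence_interval` are illustrative assumptions.

```python
import math
import random
import statistics

def mean_confidence_interval(sample, z=1.96):
    """Normal-approximation confidence interval for the mean of a simple random sample."""
    n = len(sample)
    mean = statistics.fmean(sample)
    std_err = statistics.stdev(sample) / math.sqrt(n)  # standard error of the mean
    return mean - z * std_err, mean + z * std_err

rng = random.Random(0)
# Synthetic population of 100,000 values (for example, session durations in seconds).
population = [rng.gauss(120, 30) for _ in range(100_000)]
sample = rng.sample(population, 400)

low, high = mean_confidence_interval(sample)
print(f"95% CI for the mean: [{low:.1f}, {high:.1f}]")
print(f"true population mean: {statistics.fmean(population):.1f}")
```

Such an interval is only meaningful because each unit's selection probability is known. Under a non-probability design, no comparable error statement can be derived from the sample alone.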

Simple random sampling gives each member of the population an equal chance of being chosen for the sample. It's similar to drawing a name out of a bowl. Simple random sampling can be performed by anonymizing the population, for example, assigning a number to each object or person in the population and selecting numbers randomly.

Simple random sampling removes selection bias from the sampling process and is inexpensive, simple, and quick to use. However, it gives the researcher no means of control, which increases the likelihood that unrepresentative groupings will be chosen at random.

Cluster sampling randomly selects whole groupings from the target population rather than individual units. These might be already established groupings, like residents of particular postal codes or students in a particular academic year. In two-stage cluster sampling, clusters are randomly chosen in the first stage, and units within each chosen cluster are then randomly chosen in the second stage.

In systematic sampling, sometimes called systematic clustering, only the first item is subject to random selection.

Afterward, every nth item or person is chosen according to a rule. Although there is some element of randomness, the researcher controls how often items are chosen, ensuring that the picks won't unintentionally cluster together. Stratified sampling uses random selection within established groupings.

Knowing information about the target population helps researchers stratify it for research purposes. Although stratified sampling offers advantages, it also raises the issue of subdividing a population, increasing the chance of bias.
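Stratified sampling as described here, with random selection inside each established grouping, can be sketched as follows. The sketch assumes proportional allocation (each stratum contributes the same fraction of its members), which the text does not prescribe. It also keeps at least one member per stratum, so totals can slightly exceed the fraction for very small strata. The age-band population and the helper name `stratified_sample` are hypothetical.

```python
import random
from collections import defaultdict

def stratified_sample(records, stratum_of, fraction, seed=None):
    """Proportional stratified sampling: a simple random sample within each stratum.

    `stratum_of` maps a record to its stratum label (for example, an age band).
    """
    rng = random.Random(seed)
    strata = defaultdict(list)
    for record in records:
        strata[stratum_of(record)].append(record)

    sample = []
    for members in strata.values():
        k = max(1, round(len(members) * fraction))  # at least one record per stratum
        sample.extend(rng.sample(members, k))       # random selection inside the stratum
    return sample

# Hypothetical population: 700 people aged 18-34, 200 aged 35-54, 100 aged 55+.
people = ([("18-34", i) for i in range(700)]
          + [("35-54", i) for i in range(200)]
          + [("55+", i) for i in range(100)])
sample = stratified_sample(people, stratum_of=lambda p: p[0], fraction=0.1, seed=1)
print({label: sum(1 for p in sample if p[0] == label) for label in ("18-34", "35-54", "55+")})
# → {'18-34': 70, '35-54': 20, '55+': 10}
```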

Non-probability sampling techniques are chosen when the precision of the results is not crucial. Non-probability sampling doesn't need a sampling frame, is affordable, and is simple. The bias in the results can be reduced if a non-probability sample is implemented appropriately.

The fundamental drawback of non-probability sampling is that making assumptions about the entire population from it is hazardous. The simplest sampling technique is convenience sampling, where participants are picked based on their availability and willingness to participate in the survey.

The sample may not be representative of the population as a whole, so the results are subject to severe bias.

The sampling process includes determining the sample size, collecting the data, and analyzing the sample data.

In stratified sampling, the population is subdivided into subgroups, called strata, based on some characteristics (age, gender, income, etc.).

Most web analytics platforms automatically start sampling data when you reach a particular limit of actions tracked on your website.

By default, Piwik PRO does not sample your data. With Piwik PRO, you get unsampled data at all times unless you decide sampling is necessary. In Piwik PRO, sampling serves to improve report performance. The sample is taken from the entire data set, meaning the more traffic considered, the more accurate the results.

If you experience problems loading reports, you can enable data sampling and choose the sample size. The data is sampled by the visitor ID, so the context of a session is not lost.

This allows you to still use funnel reports where paths of users in sessions are analyzed, and complete paths are required for accurate reporting.
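The internal implementation Piwik PRO uses is not described here, so the following is only a generic sketch of what sampling by visitor ID means. Each visitor ID is hashed to a number, and either all or none of that visitor's events are kept, so session paths stay complete. The functions `keep_visitor` and `sample_events_by_visitor` and the event layout are hypothetical.

```python
import hashlib

def keep_visitor(visitor_id: str, rate: float) -> bool:
    """Deterministically keep roughly `rate` of visitors, based on a hash of their ID."""
    digest = hashlib.sha256(visitor_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big")  # integer in [0, 2**64)
    return bucket < rate * 2**64

def sample_events_by_visitor(events, rate):
    """Keep every event of each selected visitor, so funnels and paths stay intact."""
    return [e for e in events if keep_visitor(e["visitor_id"], rate)]

# Hypothetical events: 1,000 visitors, each going through the same 4-step funnel.
events = [
    {"visitor_id": f"visitor-{v}", "step": step}
    for v in range(1000)
    for step in ("landing", "product", "cart", "checkout")
]
sampled = sample_events_by_visitor(events, rate=0.25)
print(len({e["visitor_id"] for e in sampled}), "visitors kept")
```

Sampling individual events instead would break paths apart, since some steps of a visitor's journey would be kept and others dropped.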

No data is removed: the data is collected even if the traffic limits are exceeded, and you can use it later, for example, if you upgrade to a paid plan. Sampled data may not be good enough for accurate data analysis.

Yet sampling can be particularly useful with data sets that are too large to analyze as a whole. Always make sure your analytics platform provides solid data, and use sampling only when working with the full dataset slows down report loading.

Otherwise, you may miss out on information that might be critical for your business. In her career, she has balanced branding, marketing strategies and content creation, and she believes that content is about the experience. February 14, by Karolina Matuszewska, Małgorzata Poddębniak.

February 17, by Natalia Chronowska.


Convenience sampling is usually conducted in offices and on social networking sites.

Examples include online surveys and product surveys. In judgment, or purposive, sampling, a researcher uses judgment to select individuals from the population to take part in the study.

Researchers frequently believe they can use good judgment to gather a representative sample while saving time and money. Because this approach relies on the researcher's expertise to assemble the group, the results can be highly accurate, with a small margin of error, when that expertise is sound.

Snowball sampling entails primary data sources proposing other prospective primary data sources that may be employed in the study. To recruit more subjects, the snowball sampling approach relies on referrals from the original participants.

As a result, using this sampling technique, sample group members are chosen by chain referral. When examining difficult-to-reach groups, the social sciences frequently adopt this sampling methodology.

As more subjects who are known to the existing subjects are nominated, the sample grows in size like a snowball. For instance, participants can be asked to suggest more users for interviews while researching risk behaviors among intravenous drug users.
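The chain-referral process above amounts to a breadth-first walk over a referral network, stopping once the sample is large enough. A minimal sketch, assuming the referrals are already known as a mapping; the network, the participant labels, and the function name `snowball_sample` are hypothetical.

```python
from collections import deque

def snowball_sample(referrals, seeds, max_size):
    """Chain-referral sampling: start from seed participants and follow their referrals.

    `referrals` maps each participant to the people they nominate.
    """
    sample, seen = [], set(seeds)
    queue = deque(seeds)
    while queue and len(sample) < max_size:
        person = queue.popleft()
        sample.append(person)
        for referred in referrals.get(person, []):
            if referred not in seen:  # never recruit the same person twice
                seen.add(referred)
                queue.append(referred)
    return sample

# Hypothetical referral network within a hard-to-reach group.
referrals = {
    "A": ["B", "C"],
    "B": ["D"],
    "C": ["D", "E"],
    "E": ["F"],
}
print(snowball_sample(referrals, seeds=["A"], max_size=5))  # → ['A', 'B', 'C', 'D', 'E']
```

Who ends up in the sample depends entirely on the seeds and on who knows whom, which is why the result is a non-probability sample.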

Quota sampling is the sampling technique most used by market researchers. The researchers split the survey population into mutually exclusive subgroups.

These categories are chosen based on well-known characteristics, qualities, or interests. The researcher chooses representative samples from each class. To achieve the objectives of the study accurately, it is critical to pick a sampling technique carefully for every research project.
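The quota procedure can be sketched in Python to show where its non-random element comes from. Quotas are filled in the order respondents happen to be encountered, and recruiting stops once every quota is met. The stream of passers-by, the quota figures, and the function name `quota_sample` are hypothetical.

```python
def quota_sample(respondents, group_of, quotas):
    """Fill fixed quotas per group in the order respondents are encountered (non-random).

    `quotas` maps a group label to the number of respondents wanted from that group.
    """
    filled = {group: [] for group in quotas}
    for person in respondents:
        group = group_of(person)
        if group in filled and len(filled[group]) < quotas[group]:
            filled[group].append(person)
        if all(len(filled[g]) == quotas[g] for g in quotas):
            break  # every quota is met, so recruiting stops
    return filled

# Hypothetical stream of passers-by: (id, gender)
passers_by = [(i, "female" if i % 3 else "male") for i in range(100)]
result = quota_sample(passers_by, group_of=lambda p: p[1], quotas={"female": 6, "male": 4})
print({group: [p[0] for p in members] for group, members in result.items()})
```

The first people to come along fill the quotas. Everyone encountered later has no chance of selection, which is the source of the potential bias.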

However, it is important to note that different sampling methods require different elements to form the sampling frame, and that the efficiency of a sample depends on a number of variables.

The various sampling techniques in research and their subtypes have now been covered. To summarise, the key distinction is that probability sampling techniques select units at random, so each unit's chance of selection is known, whereas non-probability sampling techniques rely on the researcher's judgment, on convenience, or on referrals.

In data analytics, sampling is the process of selecting a representative subset of a larger population. Sampling makes it relatively easy to study a population closely through the samples drawn from it, and it helps an analyst determine a population's characteristics more cost-effectively and practically.

Sampling aims to collect data that can be used to draw conclusions about the wider population, and there are many reasons why it is important in data analytics.

Cluster sampling is commonly implemented as multistage sampling.

This is a complex form of cluster sampling in which two or more levels of units are embedded one in the other. The first stage consists of constructing the clusters that will be used to sample from. In the second stage, a sample of primary units is randomly selected from each cluster rather than using all units contained in all selected clusters.

In the following stages, additional samples of units are selected within each of those selected clusters, and so on. All ultimate units (individuals, for instance) selected at the last step of this procedure are then surveyed. This technique is thus essentially the process of taking random subsamples of preceding random samples.

Multistage sampling can substantially reduce sampling costs, where the complete population list would need to be constructed before other sampling methods could be applied. By eliminating the work involved in describing clusters that are not selected, multistage sampling can reduce the large costs associated with traditional cluster sampling.
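A two-stage version of this process can be sketched as follows. Clusters are drawn at random first, and then units are drawn at random within each chosen cluster, so only the chosen clusters ever need a full list of their units. The frame of postal codes and households and the function name `two_stage_cluster_sample` are hypothetical.

```python
import random

def two_stage_cluster_sample(clusters, n_clusters, n_per_cluster, seed=None):
    """Stage 1: randomly pick clusters. Stage 2: randomly pick units within each one.

    `clusters` maps a cluster label (for example, a postal code) to its list of units.
    """
    rng = random.Random(seed)
    chosen = rng.sample(sorted(clusters), n_clusters)  # stage 1: sample the clusters
    return {
        # stage 2: subsample units inside each chosen cluster only
        label: rng.sample(clusters[label], min(n_per_cluster, len(clusters[label])))
        for label in chosen
    }

# Hypothetical frame: 20 postal codes with 50 households each.
clusters = {f"PC{c:02d}": [f"PC{c:02d}-H{h}" for h in range(50)] for c in range(20)}
sample = two_stage_cluster_sample(clusters, n_clusters=4, n_per_cluster=5, seed=3)
for label, households in sample.items():
    print(label, households)
```

Adding further stages would follow the same pattern, with each stage subsampling within the units selected by the previous one.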

In quota sampling, the population is first segmented into mutually exclusive subgroups, just as in stratified sampling. Then judgement is used to select the subjects or units from each segment based on a specified proportion. For example, an interviewer may be told to sample a set number of females and males within a given age range starting at 45. It is this second step which makes the technique one of non-probability sampling.

In quota sampling, the selection of the sample is non-random. For example, interviewers might be tempted to interview those who look most helpful. The problem is that these samples may be biased because not everyone gets a chance of selection.

This non-random element is its greatest weakness, and quota versus probability sampling has been a matter of controversy for several years.

In imbalanced datasets, where the sampling ratio does not follow the population statistics, one can resample the dataset in a conservative manner called minimax sampling.

The minimax sampling has its origin in Anderson minimax ratio whose value is proved to be 0. This ratio can be proved to be minimax ratio only under the assumption of LDA classifier with Gaussian distributions.

The notion of minimax sampling has recently been developed for a general class of classification rules, called class-wise smart classifiers. In this case, the sampling ratio of classes is selected so that the worst-case classifier error, over all possible population statistics for class prior probabilities, is as small as possible.

Accidental sampling (sometimes known as grab, convenience, or opportunity sampling) is a type of non-probability sampling which involves the sample being drawn from that part of the population which is close to hand.

That is, a population is selected because it is readily available and convenient. People may be included when the researcher happens to meet them, or found through technological means such as the internet or the phone.

The researcher using such a sample cannot scientifically generalize about the total population from it, because it would not be representative enough. For example, if the interviewer were to conduct such a survey at a shopping center early in the morning on a given day, the people they could interview would be limited to those present at that time. Those people would not represent the views of other members of society in the area as well as a survey conducted at different times of day and several times per week would.

This type of sampling is most useful for pilot testing. In social science research, snowball sampling is a similar technique, where existing study subjects are used to recruit more subjects into the sample.

Some variants of snowball sampling, such as respondent driven sampling, allow calculation of selection probabilities and are probability sampling methods under certain conditions. The voluntary sampling method is a type of non-probability sampling.

Volunteers choose to complete a survey. Volunteers may be invited through advertisements in social media. The advertisement may include a message about the research and link to a survey. After following the link and completing the survey, the volunteer submits the data to be included in the sample population.

This method can reach a global population but is limited by the campaign budget. Volunteers outside the invited population may also be included in the sample. It is difficult to make generalizations from this sample because it may not represent the total population.

Often, volunteers have a strong interest in the main topic of the survey. Line-intercept sampling is a method of sampling elements in a region whereby an element is sampled if a chosen line segment, called a "transect", intersects the element.
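The line-intercept rule can be sketched geometrically. Each element is modelled as a circle, and the transect as a horizontal line segment. An element is sampled if the segment touches it, that is, if the nearest point of the segment lies within the element's radius. The circular shapes, the shrub coordinates, and the function name `intersects_transect` are illustrative assumptions.

```python
import math

def intersects_transect(center, radius, y0, x_start, x_end):
    """True if a circular element touches the horizontal transect y = y0, x in [x_start, x_end]."""
    x, y = center
    nearest_x = min(max(x, x_start), x_end)  # closest point of the segment to the center
    return math.hypot(x - nearest_x, y - y0) <= radius

# Hypothetical shrubs as (center, radius) in metres; the transect runs from (0, 0) to (10, 0).
shrubs = {
    "s1": ((2.0, 0.5), 1.0),   # crosses the line
    "s2": ((5.0, 3.0), 1.0),   # too far from the line
    "s3": ((11.0, 0.0), 1.5),  # overlaps the end of the segment
    "s4": ((14.0, 0.0), 1.0),  # beyond the end of the segment
}
sampled = [name for name, (c, r) in shrubs.items() if intersects_transect(c, r, 0.0, 0.0, 10.0)]
print(sampled)  # → ['s1', 's3']
```

Larger elements are more likely to be crossed by the transect, so estimates built from such a sample usually need to account for element size.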

Panel sampling is the method of first selecting a group of participants through a random sampling method and then asking that group for potentially the same information several times over a period of time. Therefore, each participant is interviewed at two or more time points; each period of data collection is called a "wave".

The method was developed by sociologist Paul Lazarsfeld as a means of studying political campaigns. Panel sampling can also be used to inform researchers about within-person health changes due to age or to help explain changes in continuous dependent variables such as spousal interaction.

Snowball sampling involves finding a small group of initial respondents and using them to recruit more respondents. It is particularly useful in cases where the population is hidden or difficult to enumerate.

Theoretical sampling [18] occurs when samples are selected on the basis of the results of the data collected so far, with the goal of developing a deeper understanding of the area or developing theories.

Extreme or very specific cases might be selected in order to maximize the likelihood a phenomenon will actually be observable.

In active sampling, the samples used for training a machine learning algorithm are actively selected (compare active learning in machine learning).

Sampling schemes may be without replacement ('WOR': no element can be selected more than once in the same sample) or with replacement ('WR': an element may appear multiple times in one sample). For example, if we catch fish, measure them, and immediately return them to the water before continuing with the sample, this is a WR design, because we might end up catching and measuring the same fish more than once.
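The two designs map onto two functions in Python's standard `random` module. `choices` draws with replacement, so an element can recur, like a fish caught again after release. `sample` draws without replacement, so each element appears at most once. The pond of ten fish and the seed are illustrative assumptions.

```python
import random

rng = random.Random(5)
fish = [f"fish-{i}" for i in range(10)]  # hypothetical pond of 10 fish

# WR: each catch is returned before the next one, so the same fish can recur.
with_replacement = rng.choices(fish, k=8)

# WOR: caught fish are kept out of the pond, so every fish appears at most once.
without_replacement = rng.sample(fish, k=8)

print("WR :", with_replacement)
print("WOR:", without_replacement)
```

A WR sample can be larger than the population, whereas a WOR sample cannot. `rng.sample(fish, k=11)` raises `ValueError`.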

However, if we do not return the fish to the water, or if we tag and release each fish after catching it, this becomes a WOR design.

Sampling enables the selection of the right data points from within a larger data set to estimate the characteristics of the whole population.

For example, millions of tweets are produced every day. It is not necessary to look at all of them to determine the topics that are discussed during the day, nor is it necessary to look at all the tweets to determine the sentiment on each of the topics.

A theoretical formulation for sampling Twitter data has been developed. In manufacturing, different types of sensor data, such as acoustics, vibration, pressure, current, voltage, and controller data, are available at short time intervals.

To predict downtime, it may not be necessary to look at all the data; a sample may be sufficient.

Survey results are typically subject to some error. Total errors can be classified into sampling errors and non-sampling errors. The term "error" here includes systematic biases as well as random errors.

Non-sampling errors are other errors which can impact final survey estimates, caused by problems in data collection, processing, or sample design.

After sampling, a review should be held. A particular problem involves non-response. Two major types of non-response exist [21] [22]. In survey sampling, many of the individuals identified as part of the sample may be unwilling to participate or may not have the time to participate (opportunity cost) [23], or survey administrators may not have been able to contact them.

In this case, there is a risk of differences between respondents and nonrespondents, leading to biased estimates of population parameters.

This is often addressed by improving survey design, offering incentives, and conducting follow-up studies which make a repeated attempt to contact the unresponsive and to characterize their similarities and differences with the rest of the frame.

Nonresponse is particularly a problem in internet sampling. Reasons for this problem may include improperly designed surveys [22], over-surveying or survey fatigue [17] [25], and the fact that potential participants may have multiple e-mail addresses, which they no longer use or do not check regularly.

In many situations, the sample fraction may be varied by stratum, and data will have to be weighted to correctly represent the population. For example, a simple random sample of individuals in the United Kingdom might not include some people in remote Scottish islands, who would be inordinately expensive to sample.

A cheaper method would be to use a stratified sample with urban and rural strata. The rural sample could be under-represented in the sample, but weighted up appropriately in the analysis to compensate. More generally, data should usually be weighted if the sample design does not give each individual an equal chance of being selected.

For instance, when households have equal selection probabilities but one person is interviewed from within each household, this gives people from large households a smaller chance of being interviewed. This can be accounted for using survey weights.
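The household example can be made concrete with a small weighting sketch. Households are assumed to be drawn with equal probability, with one person interviewed per household. A person's selection probability is then proportional to 1 / household size, so the design weight is proportional to household size. The interview figures and the helper name `weighted_mean` are invented. The estimator is a weighted mean of the Hájek form, sum(w·y) / sum(w).

```python
def weighted_mean(responses):
    """Estimate a population mean from (value, household_size) pairs.

    Assumes equal-probability households and one interviewee per household, so each
    respondent's design weight is proportional to the size of their household.
    """
    total_weight = sum(size for _, size in responses)
    return sum(value * size for value, size in responses) / total_weight

# Hypothetical interviews: (hours of TV per week, people in the household)
responses = [(10.0, 1), (4.0, 3), (6.0, 2), (12.0, 1)]

unweighted = sum(v for v, _ in responses) / len(responses)
weighted = weighted_mean(responses)
print(f"unweighted: {unweighted:.2f}, weighted: {weighted:.2f}")  # → unweighted: 8.00, weighted: 6.57
```

Without weights, people from large households are under-represented, and in this invented data that pushes the estimate up.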

Similarly, households with more than one telephone line have a greater chance of being selected in a random digit dialing sample, and weights can adjust for this.

The textbook by Groves et alia provides an overview of survey methodology, including recent literature on questionnaire development informed by cognitive psychology. The other books focus on the statistical theory of survey sampling and require some knowledge of basic statistics; more mathematical statistics is required for Lohr, for Särndal et alia, and for Cochran [26]. The historically important books by Deming and Kish remain valuable for insights for social scientists, particularly about the U.S. census and the Institute for Social Research at the University of Michigan.



Data sampling makes it possible to gain insights and make decisions based on a representative sample. In the above example, not everybody has the same probability of selection; what makes it a probability sample is the fact that each person's probability is known. However, segmenting is something that you usually do at the analysis stage, not at the capturing stage.

